¶ Comparing Inpainting Methods in Shakker Online ComfyUI

Inpainting is the process of selectively modifying or "repainting" specific parts of an image while preserving the unmasked areas. This technique is widely used for tasks such as object removal, repairing damaged sections of an image, or creatively altering elements within a scene.

Shakker Online ComfyUI offers multiple inpainting methods that cater to varying needs and complexities. This blog compares the two primary methods:

- VAE Encode (for Inpainting)

- Set Latent Noise Mask

Additionally, we’ll explore advanced workflows incorporating Fooocus Inpainting and IPAdapter for more refined results.

- Method 1: VAE Encode (for Inpainting)
- Method 2: Set Latent Noise Mask
- Comparison of VAE Encode and Set Latent Noise Mask
- Advanced Tools for Enhanced Inpainting
- Practical Application: Object Removal with Inpainting
- Conclusion: Choosing the Right Inpainting Method

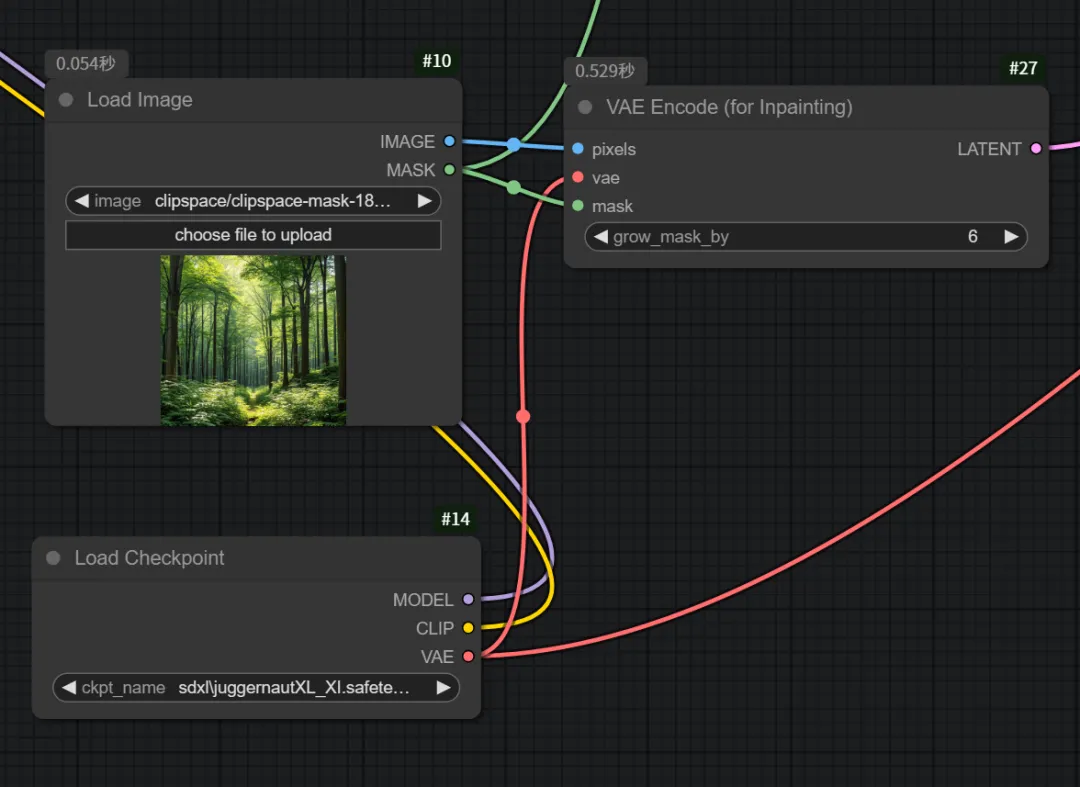

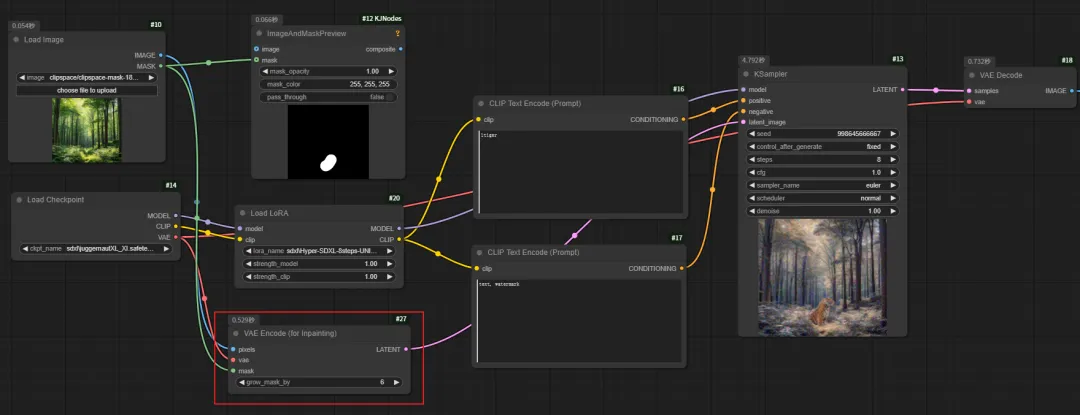

¶ Method 1: VAE Encode (for Inpainting)

¶ Overview

The VAE Encode (for Inpainting) method uses Variational Autoencoder (VAE) encoding to create a latent representation of the image and mask. This latent data is processed by the KSampler to generate the inpainted image.

¶ Workflow

- Inputs:
  - pixels: The original image.
  - mask: The manually drawn area to modify.
  - vae: The VAE model used for latent encoding.

- Process:
  - The image and mask are encoded together into a latent, with the masked area marked for regeneration.
  - This latent is passed to the KSampler, which generates the final inpainted image.

- Key Features:
  - Considers the context of the surrounding image.
  - Allows adjustment of denoising strength (set on the KSampler) to control blending with the original image.

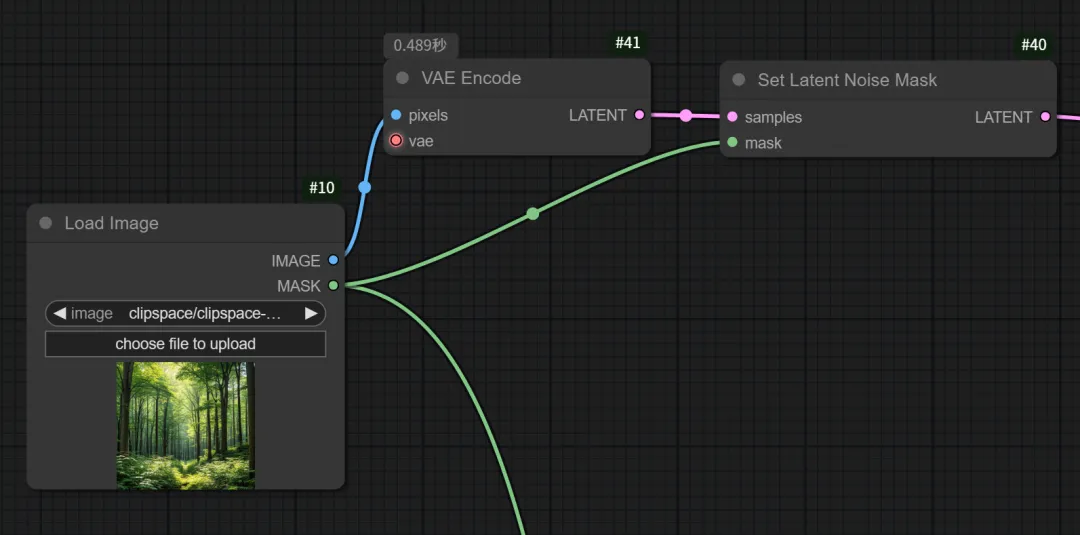
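The wiring described above can be sketched as a graph in the API (JSON) format used by stock ComfyUI, written here as a Python dict. This is a minimal sketch, assuming Shakker Online ComfyUI exposes the same core node classes as stock ComfyUI (`LoadImage`, `VAEEncodeForInpaint`, `KSampler`, and so on). The function name, file names, prompt, and sampler settings are placeholders for illustration, not values from this article. The `grow_mask_by` input comes from the stock node and is not discussed above.

```python
import json


def build_vae_inpaint_graph(image_name, ckpt_name, prompt, denoise=1.0):
    """Return an API-format graph for the VAE Encode (for Inpainting) method.

    Every link has the form [source_node_id, output_index].
    """
    return {
        # CheckpointLoaderSimple outputs: 0 = MODEL, 1 = CLIP, 2 = VAE
        "1": {"class_type": "CheckpointLoaderSimple",
              "inputs": {"ckpt_name": ckpt_name}},
        # LoadImage outputs: 0 = IMAGE, 1 = MASK
        "2": {"class_type": "LoadImage",
              "inputs": {"image": image_name}},
        "3": {"class_type": "CLIPTextEncode",
              "inputs": {"text": prompt, "clip": ["1", 1]}},
        "4": {"class_type": "CLIPTextEncode",
              "inputs": {"text": "", "clip": ["1", 1]}},
        # pixels + mask + vae go in, a latent marked for inpainting comes out
        "5": {"class_type": "VAEEncodeForInpaint",
              "inputs": {"pixels": ["2", 0], "mask": ["2", 1],
                         "vae": ["1", 2], "grow_mask_by": 6}},
        "6": {"class_type": "KSampler",
              "inputs": {"model": ["1", 0],
                         "positive": ["3", 0], "negative": ["4", 0],
                         "latent_image": ["5", 0],
                         "seed": 0, "steps": 20, "cfg": 7.0,
                         "sampler_name": "euler", "scheduler": "normal",
                         "denoise": denoise}},
        "7": {"class_type": "VAEDecode",
              "inputs": {"samples": ["6", 0], "vae": ["1", 2]}},
        "8": {"class_type": "SaveImage",
              "inputs": {"images": ["7", 0], "filename_prefix": "inpaint_vae"}},
    }


# Placeholder file names and prompt, for illustration only.
graph = build_vae_inpaint_graph(
    "photo_with_mask.png", "example_inpaint.safetensors", "a clean asphalt road"
)
print(json.dumps(graph["5"], indent=2))
```

Building the graph in code makes it easy to change one setting, such as `denoise`, and compare results across otherwise identical runs.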

¶ Method 2: Set Latent Noise Mask

¶ Overview

The Set Latent Noise Mask method attaches the mask to an already-encoded latent of the original image, so that noise is added only within the masked area. This noise drives the generation of new content while preserving the unmasked areas.

¶ Workflow

- Inputs:
  - samples: The latent encoding of the original image.
  - mask: The manually drawn mask that defines the inpainting area.

- Process:
  - Noise is added to the masked area of the latent.
  - This noisy latent is passed to the KSampler for output generation.

- Key Features:
  - Similar in functionality to VAE Encode (for Inpainting).
  - Provides comparable results, with natural blending of the masked and unmasked areas.
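The two inputs listed above map onto a pair of nodes in stock ComfyUI's API-format graph: a plain `VAEEncode` that produces `samples`, followed by `SetLatentNoiseMask`. The sketch below assumes those stock class names are available in Shakker Online ComfyUI and that a `LoadImage` node and a `CheckpointLoaderSimple` node already exist elsewhere in the graph. The helper name and its parameters are hypothetical.

```python
def noise_mask_encoder_nodes(image_id, checkpoint_id, first_free_id):
    """Return the encode + mask nodes and the link to feed into KSampler.

    image_id:      id of an existing LoadImage node (0 = IMAGE, 1 = MASK)
    checkpoint_id: id of an existing CheckpointLoaderSimple node (2 = VAE)
    first_free_id: first unused integer node id in the graph
    """
    encode_id = str(first_free_id)
    mask_id = str(first_free_id + 1)
    nodes = {
        # Encode the full, unmodified image into a latent.
        encode_id: {"class_type": "VAEEncode",
                    "inputs": {"pixels": [image_id, 0],
                               "vae": [checkpoint_id, 2]}},
        # Attach the mask so only the masked area is regenerated.
        mask_id: {"class_type": "SetLatentNoiseMask",
                  "inputs": {"samples": [encode_id, 0],
                             "mask": [image_id, 1]}},
    }
    # This link is what the KSampler's latent_image input should point at.
    return nodes, [mask_id, 0]


nodes, latent_link = noise_mask_encoder_nodes("2", "1", 5)
print(latent_link)
```

The returned link is wired into the KSampler's `latent_image` input, and the rest of the graph (prompts, sampler, decode, save) stays the same.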

¶ Comparison of VAE Encode and Set Latent Noise Mask

| Feature | VAE Encode (for Inpainting) | Set Latent Noise Mask |
|---|---|---|
| Context Awareness | Considers surrounding image data. | Considers surrounding image data. |
| Workflow Complexity | Simple to implement. | Simple to implement. |
| Blending Quality | Excellent, with natural results. | Excellent, with natural results. |
| Denoising Control | Adjustable via KSampler. | Adjustable via KSampler. |
| Key Difference | Uses VAE encoding. | Adds noise to latent data. |

Both methods deliver high-quality results with seamless integration of the inpainted area into the original image.
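To make the "Key Difference" row more concrete, here is a conceptual sketch in plain Python, using small nested lists in place of image tensors. It is based on how the stock ComfyUI nodes behave, which is an assumption about Shakker's hosted build: VAE Encode (for Inpainting) replaces the masked pixels with neutral gray before encoding, while Set Latent Noise Mask leaves the image untouched and only tells the sampler where to regenerate. In both cases the unmasked area is kept from the original. The function names are illustrative and are not ComfyUI APIs.

```python
def blank_masked_pixels(pixels, mask, fill=0.5):
    """Replace masked pixels (mask >= 0.5) with neutral gray before encoding."""
    return [[fill if m >= 0.5 else p for p, m in zip(prow, mrow)]
            for prow, mrow in zip(pixels, mask)]


def blend_with_original(original, generated, mask):
    """Keep the original where mask is 0 and the generated value where it is 1."""
    return [[m * g + (1.0 - m) * o for o, g, m in zip(orow, grow, mrow)]
            for orow, grow, mrow in zip(original, generated, mask)]


# A 2x2 "image" with one masked pixel in the top-right corner.
pixels = [[0.1, 0.9], [0.3, 0.7]]
mask = [[0.0, 1.0], [0.0, 0.0]]
generated = [[1.0, 1.0], [1.0, 1.0]]

blanked = blank_masked_pixels(pixels, mask)
blended = blend_with_original(pixels, generated, mask)
print(blanked)
print(blended)
```

Because the first approach discards what was under the mask, lower denoise values tend to leave gray residue with that node in stock ComfyUI, whereas the noise-mask approach can reuse the original content at partial denoise.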

¶ Advanced Tools for Enhanced Inpainting

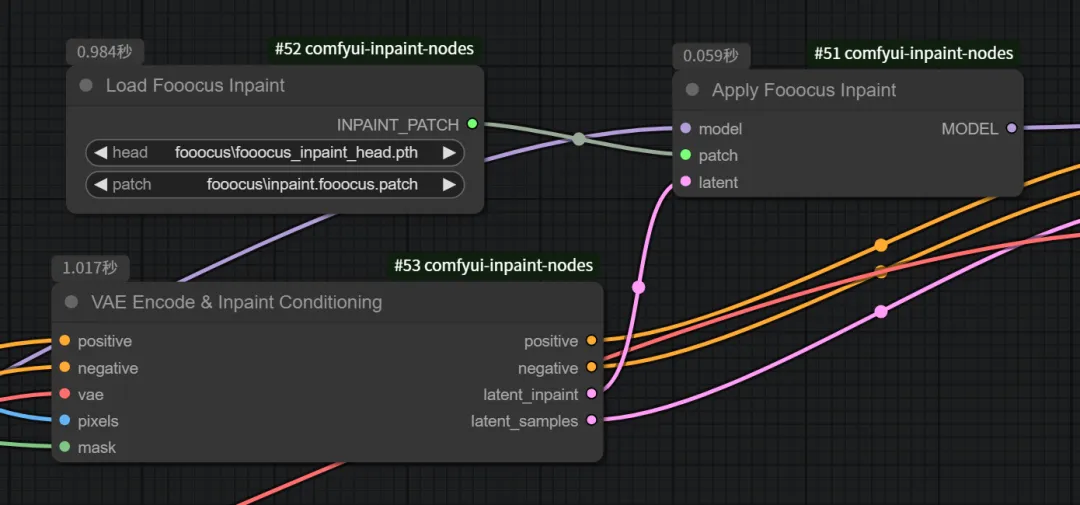

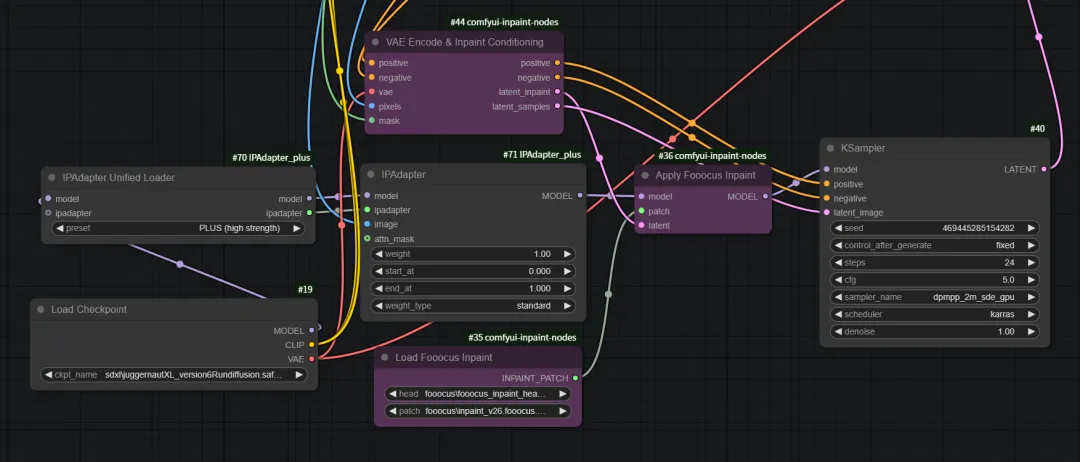

¶ Fooocus Inpainting

Fooocus leverages advanced machine learning to provide highly detailed and seamless inpainting results.

- Advantages:
  - Intelligent blending of masked areas.
  - High visual quality with minimal artifacts.
  - Customizable parameters, such as patch models and LoRA weights, for precise control.

¶ IPAdapter for Style Consistency

IPAdapter helps keep the style of the inpainted area consistent with the unmasked portions of the image.

- Placement in Workflow:
  - Can be added before or after Fooocus inpainting.
  - Has minimal impact on performance while keeping the result stylistically coherent.

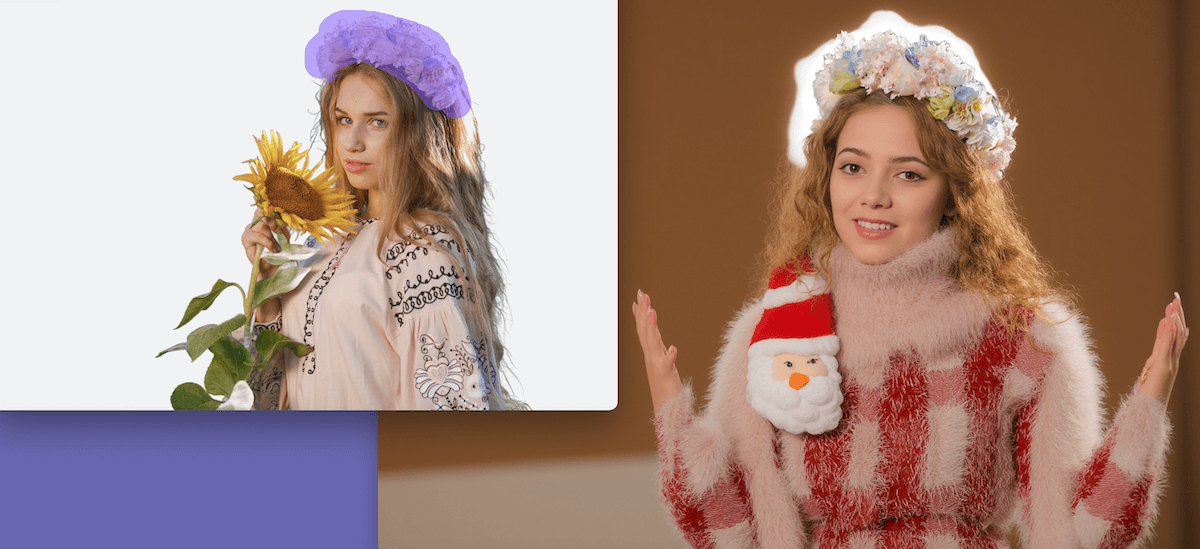

¶ Practical Application: Object Removal with Inpainting

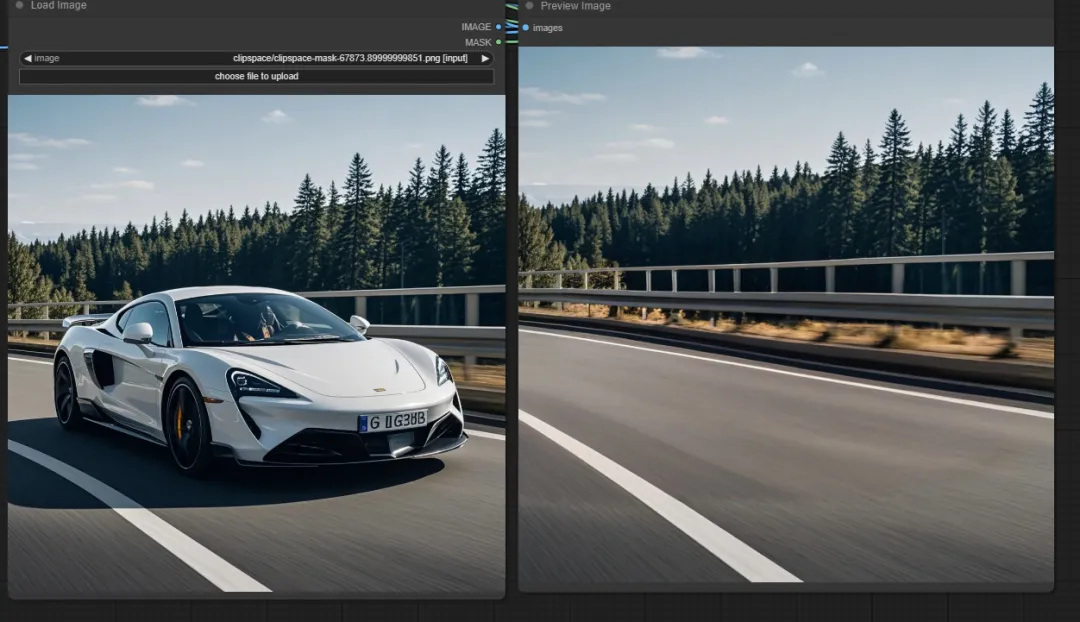

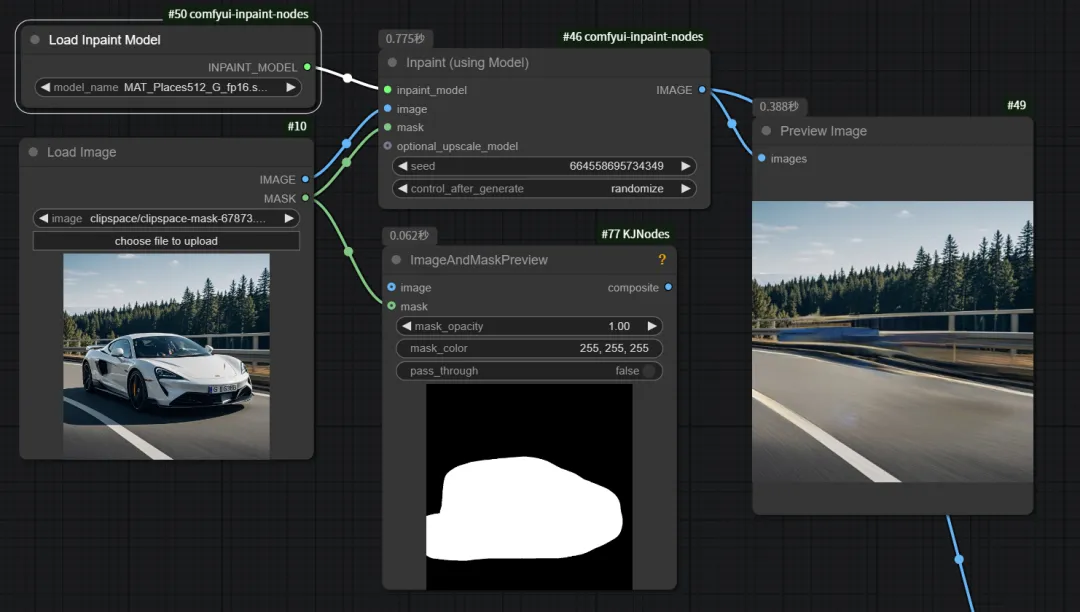

¶ Scenario: Removing a Car

- Initial Workflow: Using basic inpainting with the prompt "empty space" resulted in the removal of the car, but the road was replaced by an empty patch of land.

- Refined Workflow: Incorporating advanced preprocessing with Inpaint (using Model) delivered significantly improved results, retaining the road texture.

¶ Model Comparison for Preprocessing:

- big-lama.pt: Best performance with minimal artifacts.

- MAT_Places512_G_fp16.safetensors: Decent results but more residual artifacts.

- Places_512_FullData_G_MAT.pth: Similar to MAT_Places512 but less effective.

¶ Conclusion: Choosing the Right Inpainting Method

When it comes to inpainting, Shakker Online ComfyUI provides versatile tools to suit diverse needs.

- For simplicity and seamless blending, both VAE Encode (for Inpainting) and Set Latent Noise Mask are reliable choices.

- For advanced precision and challenging tasks, tools like Fooocus and IPAdapter offer unparalleled flexibility and quality.

¶ Unlock the Power of Shakker Online ComfyUI

Whether you're an AI artist, designer, or creative enthusiast, explore Shakker Online ComfyUI's inpainting methods today to take your image editing to the next level!