¶ TensorFlow: A Beginner's Guide to Deep Learning and AI

Deep learning and AI have transformed countless industries, from healthcare to entertainment, and one of the most powerful tools fueling this revolution is TensorFlow. Developed by Google, TensorFlow is an open-source framework designed for creating machine learning and deep learning models. It has become a cornerstone for applications like computer vision, natural language processing, and predictive analytics.

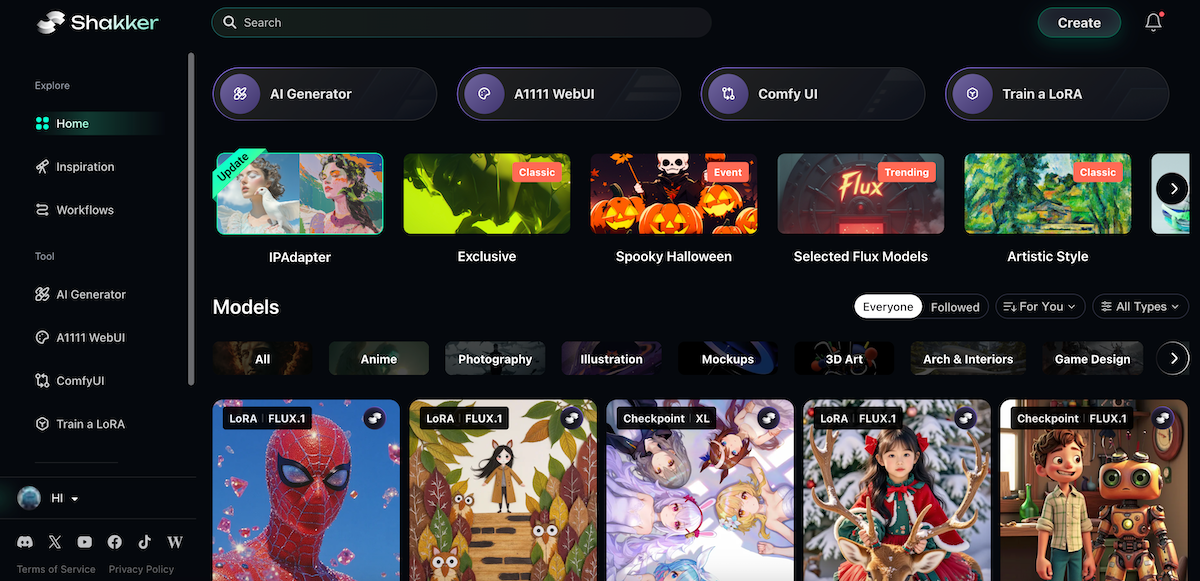

TensorFlow’s comprehensive ecosystem supports both research and production environments, making it a popular choice among developers and data scientists. It is often compared with PyTorch, its closest rival in the deep learning space. Complementing this ecosystem, platforms like Shakker AI provide tools for image generation, model training, and data augmentation that can round out an AI workflow.

Let’s dive deeper into what TensorFlow is, compare it with PyTorch, and explore how it works in practice for AI applications.

¶ What Is TensorFlow?

At its core, TensorFlow is an open-source framework for building and deploying machine learning models. Developed by the Google Brain team, it provides tools for constructing, training, and serving neural networks. Its name reflects its core idea: tensors (multi-dimensional arrays) flowing through a graph of computations. In TensorFlow 2.x, operations execute eagerly by default, and `tf.function` can compile Python code into a computational graph for speed and portability.
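A minimal sketch of both ideas follows. Tensors are created and operated on eagerly, and then `tf.function` traces the same kind of computation into a graph. The function name `affine` and all the values are illustrative only.

```python
import tensorflow as tf

# A rank-2 tensor (a 2x3 matrix) and a rank-1 tensor (a length-3 vector).
x = tf.constant([[1.0, 2.0, 3.0],
                 [4.0, 5.0, 6.0]])
w = tf.constant([0.5, 0.5, 0.5])

# Eager execution (the TensorFlow 2.x default): ops run immediately.
# x * w broadcasts w across each row, then each row is summed.
eager_result = tf.reduce_sum(x * w, axis=1)
print(x.shape)                       # (2, 3)
print(eager_result.numpy().tolist())  # [3.0, 7.5]

# tf.function traces the Python body into a reusable computational graph.
@tf.function
def affine(inputs, weights, bias):
    """Hypothetical helper: weighted row sums plus a bias."""
    return tf.reduce_sum(inputs * weights, axis=1) + bias

graph_result = affine(x, w, tf.constant(1.0))
print(graph_result.numpy().tolist())  # [4.0, 8.5]
```

The graph version returns the same numbers as eager code. The difference is that TensorFlow can optimize and export the traced graph.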

¶ Key Components of TensorFlow:

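- Keras (`tf.keras`): The high-level API for defining, training, and evaluating models, used in the tutorial below.
- TensorFlow Hub: A repository of pre-trained models that can be reused across projects (its models are now hosted on Kaggle Models).
- TensorFlow Lite: A runtime optimized for mobile and embedded devices, allowing lightweight deployment of ML models (recently rebranded as LiteRT).
- TensorFlow.js: A JavaScript library for running ML models directly in web browsers and in Node.js.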
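The snippet below sketches the TensorFlow Lite workflow by converting a tiny `tf.Module` into the TensorFlow Lite flat-buffer format. The `Doubler` module is a hypothetical stand-in for a trained model. In practice you would bundle the resulting `.tflite` file with a mobile or embedded app. Converter details can differ between TensorFlow releases.

```python
import os
import tempfile

import tensorflow as tf


class Doubler(tf.Module):
    """Hypothetical toy model: multiplies its input by a scalar variable."""

    def __init__(self):
        super().__init__()
        self.scale = tf.Variable(2.0)

    # A fixed input signature tells the converter the expected shape and dtype.
    @tf.function(input_signature=[tf.TensorSpec(shape=[None, 3], dtype=tf.float32)])
    def __call__(self, x):
        return x * self.scale


module = Doubler()
concrete_fn = module.__call__.get_concrete_function()

# Convert the traced graph (variables are frozen into constants) to TFLite.
converter = tf.lite.TFLiteConverter.from_concrete_functions([concrete_fn], module)
tflite_model = converter.convert()  # serialized flat-buffer model as bytes

# Write the model to a temporary .tflite file, as you would before shipping it.
path = os.path.join(tempfile.mkdtemp(), "doubler.tflite")
with open(path, "wb") as f:
    f.write(tflite_model)
print(f"Wrote {len(tflite_model)} bytes to {path}")
```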

¶ Applications of TensorFlow:

TensorFlow’s versatility extends to:

- Neural Networks: Building deep learning architectures for tasks like object detection and language translation.
- Data Augmentation: Expanding a training set with transformed copies of existing examples (flips, rotations, crops) so that models generalize better.
- Predictive Analytics: Forecasting trends and behaviors in industries like finance and healthcare.

For instance, TensorFlow is widely used for speech recognition and image classification. Its ability to scale from small projects to enterprise-grade solutions is one of the main reasons behind its popularity.
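Data augmentation can be expressed with Keras preprocessing layers. This sketch assumes a batch of 32x32 RGB images, with random values standing in for real data. It applies random horizontal flips and small rotations. These layers only transform their input when called with `training=True`; otherwise they pass it through unchanged.

```python
import tensorflow as tf

# Augmentation pipeline: each training-mode call transforms the batch differently.
augment = tf.keras.Sequential([
    tf.keras.layers.RandomFlip("horizontal"),  # mirror images left-right at random
    tf.keras.layers.RandomRotation(0.1),       # rotate by up to +/-10% of a full turn (36 degrees)
])

# Placeholder data: 8 random 32x32 RGB "images" with values in [0, 1).
images = tf.random.uniform((8, 32, 32, 3), seed=42)

augmented = augment(images, training=True)   # random flips and rotations applied
unchanged = augment(images, training=False)  # inference mode: inputs pass through

print(augmented.shape)  # (8, 32, 32, 3): augmentation keeps the shape
```

Placing these layers at the start of a model means augmentation happens only during `fit`, not during prediction.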

¶ TensorFlow vs PyTorch

When discussing deep learning frameworks, the debate often boils down to TensorFlow vs PyTorch. While both are powerful, they cater to slightly different audiences and use cases.

¶ What is PyTorch?

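PyTorch, originally developed by Facebook’s AI Research lab (now Meta AI) and now governed by the PyTorch Foundation, is a deep learning framework built around dynamic (define-by-run) computation graphs, with an emphasis on flexibility and simplicity. It’s particularly popular in research because models can be written and debugged like ordinary Python code.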

¶ Key Differences: TensorFlow vs PyTorch

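| Feature | TensorFlow | PyTorch |
|---|---|---|
| Ease of Use | Comprehensive ecosystem; Keras simplifies common tasks, but the full stack has a steeper learning curve | Pythonic and intuitive, often easier for beginners |
| Community Support | Extensive, with tools like TensorBoard | Rapidly growing, strong academic presence |
| Production and Prototyping | Mature deployment tooling (TensorFlow Serving, TensorFlow Lite, TensorFlow.js) | Well suited to quick prototyping, and also used in production |
| GPU Support | Excellent; GPU support ships in the standard `tensorflow` package on Linux | Excellent; CUDA-enabled builds are selected via the install command |
| Computation Graphs | Eager execution by default in TF 2.x; graphs compiled with `tf.function` | Dynamic (define-by-run) by default; `torch.compile` for graph compilation |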
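The last row of the table can be illustrated with a short example. TensorFlow 2.x runs Python code eagerly, much like PyTorch. Wrapping the same function in `tf.function` lets AutoGraph convert tensor-dependent Python control flow into graph operations. The `collatz_steps` function is a hypothetical toy, chosen because its loop length depends on the input value.

```python
import tensorflow as tf

def collatz_steps(n):
    """Count Collatz steps until n reaches 1; the loop length depends on the data."""
    steps = tf.constant(0)
    while n > 1:
        # Halve even numbers, map odd numbers to 3n + 1.
        n = tf.where(n % 2 == 0, n // 2, 3 * n + 1)
        steps += 1
    return steps

# Eager: the Python while-loop runs step by step (define-by-run).
eager_steps = collatz_steps(tf.constant(6))
print(int(eager_steps))  # 8

# Graph: AutoGraph rewrites the tensor-dependent loop into a tf.while_loop.
graph_collatz = tf.function(collatz_steps)
graph_steps = graph_collatz(tf.constant(6))
print(int(graph_steps))  # 8
```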

¶ Recommendation:

- Choose TensorFlow if you need a production-ready framework with scalability and a rich ecosystem.
- Opt for PyTorch if you prioritize flexibility and rapid prototyping.

For tasks like image generation and real-time applications, TensorFlow can be complemented by tools like Shakker AI, which offers advanced workflows for AI projects.

¶ How to Install TensorFlow

Setting up TensorFlow is straightforward, whether you’re using a CPU or GPU.

¶ Step-by-Step Installation:

Install TensorFlow via pip (CPU version):

```bash
pip install tensorflow
```

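Install TensorFlow with GPU support on Linux. The separate `tensorflow-gpu` package is deprecated: since TensorFlow 2.1 the standard package includes GPU support, and the `and-cuda` extra also installs matching CUDA and cuDNN libraries:

```bash
pip install 'tensorflow[and-cuda]'
```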

Verify Installation:

```python
import tensorflow as tf

print(tf.__version__)
```

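Optionally, enable GPU memory growth. TensorFlow uses a visible GPU automatically, and this setting only stops it from reserving most of the GPU's memory at startup. It must run before the GPU is initialized:

```python
import tensorflow as tf

gpus = tf.config.list_physical_devices('GPU')
for gpu in gpus:  # loops zero times on CPU-only machines instead of raising IndexError
    tf.config.experimental.set_memory_growth(gpu, True)  # allocate GPU memory on demand
```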
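After installing, a fuller check can confirm what TensorFlow sees. This sketch prints the version and whether the binary was built with CUDA. It also lists the visible GPUs and reports which device ran a small computation. If you enable memory growth, do it before running this check, because GPU settings cannot change once the GPU has been initialized.

```python
import tensorflow as tf

print("TensorFlow version:", tf.__version__)
print("Built with CUDA:", tf.test.is_built_with_cuda())

gpus = tf.config.list_physical_devices("GPU")
print("Visible GPUs:", [gpu.name for gpu in gpus] or "none, running on CPU")

# TensorFlow places ops on a GPU automatically when one is visible.
total = tf.reduce_sum(tf.random.normal((1000, 1000)))
print("Computed on:", total.device)
```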

¶ Troubleshooting Tips:

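- Ensure your NVIDIA driver, CUDA, and cuDNN versions match the ones your TensorFlow release was built against (the TensorFlow install guide lists tested build configurations).
- Keep GPU drivers up to date. cuDNN is required for GPU support, not merely an optimization; on Linux, `pip install 'tensorflow[and-cuda]'` installs compatible CUDA and cuDNN libraries for you.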
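To track down a version mismatch, check which CUDA and cuDNN versions your TensorFlow binary was built against. The sketch below uses `tf.sysconfig.get_build_info()`. Dictionary keys can vary between releases and platforms (CPU-only builds omit the CUDA entries), so the code reads them with `.get` fallbacks. Compare the printed versions with the driver version reported by `nvidia-smi`.

```python
import tensorflow as tf

# Versions this TensorFlow binary was compiled against.
build = tf.sysconfig.get_build_info()
print("CUDA build:", build.get("is_cuda_build", False))
print("CUDA version:", build.get("cuda_version", "n/a (CPU-only build)"))
print("cuDNN version:", build.get("cudnn_version", "n/a (CPU-only build)"))
```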

By integrating TensorFlow with platforms like Shakker AI, users can leverage image generation tools, such as inpainting and outpainting, to enrich their AI workflows.

¶ TensorFlow Tutorial for Beginners

Let’s explore how to create a basic neural network in TensorFlow.

¶ Building a Simple Neural Network:

Import TensorFlow:

```python
import tensorflow as tf
```

Define the Model:

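```python
model = tf.keras.Sequential([
    tf.keras.layers.Dense(128, activation='relu'),    # hidden layer: 128 units, ReLU activation
    tf.keras.layers.Dense(10, activation='softmax'),  # output layer: one probability per class (10 classes)
])
```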
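The model above has no input shape, so Keras creates its weights only when it first sees data. This sketch assumes flattened 28x28 inputs (784 features, as in MNIST). Declaring the input shape up front lets Keras build the model immediately and report its size. The first layer has 784 × 128 weights + 128 biases = 100,480 parameters. The second has 128 × 10 + 10 = 1,290, giving 101,770 in total.

```python
import tensorflow as tf

# Assumption: inputs are flattened 28x28 grayscale images (784 features).
model = tf.keras.Sequential([
    tf.keras.Input(shape=(784,)),
    tf.keras.layers.Dense(128, activation='relu'),    # 784*128 + 128 = 100,480 params
    tf.keras.layers.Dense(10, activation='softmax'),  # 128*10 + 10 = 1,290 params
])

model.summary()              # per-layer output shapes and parameter counts
print(model.count_params())  # 101770
```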

Compile the Model:

```python
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])
```

Train the Model:

```python
# x_train: float array of shape (num_examples, num_features); y_train: integer labels 0-9
model.fit(x_train, y_train, epochs=5)
```
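The tutorial snippets assume `x_train` and `y_train` already exist. The self-contained sketch below substitutes random placeholder data for a real dataset such as MNIST, so the resulting accuracy is meaningless. To use your own data, supply arrays with 784 features per example and integer labels from 0 to 9.

```python
import numpy as np
import tensorflow as tf

# Placeholder data: 1,000 examples with 784 features and integer labels 0-9.
rng = np.random.default_rng(0)
x_train = rng.random((1000, 784), dtype=np.float32)
y_train = rng.integers(0, 10, size=1000)

model = tf.keras.Sequential([
    tf.keras.Input(shape=(784,)),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax'),
])
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

# Hold out 20% of the data to monitor generalization during training.
history = model.fit(x_train, y_train, epochs=5, batch_size=32,
                    validation_split=0.2, verbose=0)
print(history.history['loss'])  # one training-loss value per epoch

# Each prediction row is a probability distribution over the 10 classes.
probs = model.predict(x_train[:3], verbose=0)
print(probs.shape, probs.argmax(axis=1))
```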

¶ Explanation:

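- Dense layers are fully connected: the first argument sets the number of neurons (units), and `activation` sets the function applied to their outputs (ReLU in the hidden layer, softmax to turn the final outputs into class probabilities).
- The optimizer (here Adam) decides how the weights are updated from the gradients; its learning rate, 0.001 by default for Adam in Keras, controls the step size and therefore how quickly and stably training converges.
- The loss function measures how far the predictions are from the targets, and training minimizes it. `sparse_categorical_crossentropy` expects integer class labels (0 to 9 here) rather than one-hot vectors.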
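The roles of the loss and optimizer become concrete in a single training step, sketched here with `tf.GradientTape`. It uses the same kind of optimizer and loss as the compiled model above: Adam, with a larger learning rate here, and sparse categorical cross-entropy. The model and batch are hypothetical, with 20 random features and 3 classes. `model.fit` adds batching, metrics, and callbacks on top of a loop like this.

```python
import tensorflow as tf

tf.random.set_seed(0)

# Hypothetical small model: 20 input features, 3 output classes.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(20,)),
    tf.keras.layers.Dense(16, activation='relu'),
    tf.keras.layers.Dense(3, activation='softmax'),
])
loss_fn = tf.keras.losses.SparseCategoricalCrossentropy()
optimizer = tf.keras.optimizers.Adam(learning_rate=0.01)

# Hypothetical batch: 64 random examples with labels in {0, 1, 2}.
x = tf.random.normal((64, 20))
y = tf.random.uniform((64,), maxval=3, dtype=tf.int32)

def train_step(x, y):
    with tf.GradientTape() as tape:
        probs = model(x, training=True)
        loss = loss_fn(y, probs)  # loss: how far predictions are from labels
    grads = tape.gradient(loss, model.trainable_variables)
    # Optimizer: turns gradients into weight updates, scaled by the learning rate.
    optimizer.apply_gradients(zip(grads, model.trainable_variables))
    return loss

losses = [float(train_step(x, y)) for _ in range(50)]
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```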

¶ Enhancing the Workflow with Shakker AI:

For advanced image preprocessing or data augmentation, TensorFlow users can leverage Shakker AI’s Canvas tools to prepare datasets, which may help improve model accuracy.
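However the images are prepared, they usually reach the model through a `tf.data` pipeline. This minimal sketch assumes 64x64 RGB images that must be resized to 32x32 and scaled to [0, 1]. Random tensors stand in for a real dataset, and `IMG_SIZE` and `preprocess` are illustrative names.

```python
import tensorflow as tf

IMG_SIZE = 32  # assumed target resolution for the model

def preprocess(image, label):
    """Resize to IMG_SIZE x IMG_SIZE and scale pixel values to [0, 1]."""
    image = tf.image.resize(image, (IMG_SIZE, IMG_SIZE))  # returns float32
    image = image / 255.0
    return image, label

# Placeholder dataset: 100 random 64x64 RGB uint8 images with labels 0-9.
images = tf.cast(tf.random.uniform((100, 64, 64, 3), maxval=256, dtype=tf.int32), tf.uint8)
labels = tf.random.uniform((100,), maxval=10, dtype=tf.int32)

dataset = (
    tf.data.Dataset.from_tensor_slices((images, labels))
    .shuffle(buffer_size=100)                              # randomize example order
    .map(preprocess, num_parallel_calls=tf.data.AUTOTUNE)  # preprocess in parallel
    .batch(16)
    .prefetch(tf.data.AUTOTUNE)                            # overlap loading with training
)

for batch_images, batch_labels in dataset.take(1):
    print(batch_images.shape, batch_labels.shape)  # (16, 32, 32, 3) (16,)
```

A dataset built this way can be passed directly to `model.fit`.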

¶ TensorFlow in Practice: GPU TensorFlow

One of TensorFlow’s standout features is its ability to utilize GPUs for accelerated training.

¶ Why Use GPUs?

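GPUs execute thousands of arithmetic operations in parallel, which suits the matrix multiplications at the heart of neural networks and can drastically reduce training time for large datasets and complex models.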
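A rough illustration of the speed-up is to time the same matrix multiplication on the CPU and, if one is visible, on the first GPU. The sketch below does this with an untimed warm-up run first. Timings vary widely by hardware, so treat the numbers as indicative only. `time_matmul` is a hypothetical helper.

```python
import time

import tensorflow as tf

def time_matmul(device_name, size=1000, repeats=3):
    """Average time of a size x size matrix multiplication on one device."""
    with tf.device(device_name):
        a = tf.random.normal((size, size))
        b = tf.random.normal((size, size))
        tf.matmul(a, b).numpy()  # warm-up run, excluded from the timing
        start = time.perf_counter()
        for _ in range(repeats):
            result = tf.matmul(a, b)
        result.numpy()  # block until queued (possibly asynchronous GPU) work is done
        return (time.perf_counter() - start) / repeats, result

cpu_time, cpu_result = time_matmul('/CPU:0')
print(f"CPU: {cpu_time * 1000:.1f} ms per matmul")

if tf.config.list_physical_devices('GPU'):
    gpu_time, gpu_result = time_matmul('/GPU:0')
    print(f"GPU: {gpu_time * 1000:.1f} ms per matmul")
else:
    print("No GPU visible; skipping GPU timing.")
```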

¶ Enabling GPU in TensorFlow:

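Install TensorFlow with GPU support (on Linux; the old `tensorflow-gpu` package is deprecated, and the standard package now includes GPU support):

```bash
pip install 'tensorflow[and-cuda]'
```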

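TensorFlow uses a visible GPU automatically. Optionally, enable memory growth so it allocates GPU memory as needed instead of reserving most of it at startup:

```python
import tensorflow as tf

gpus = tf.config.list_physical_devices('GPU')
for gpu in gpus:  # loops zero times on CPU-only machines
    tf.config.experimental.set_memory_growth(gpu, True)  # must run before GPUs are initialized
```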

¶ Compatibility:

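TensorFlow’s official GPU builds target NVIDIA GPUs through CUDA and cuDNN. GPU support on native Windows ended with TensorFlow 2.10, so Windows users should run TensorFlow under WSL2. On Apple silicon Macs, the `tensorflow-metal` plugin provides GPU acceleration.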

¶ Shakker AI Integration:

Platforms like Shakker AI complement TensorFlow’s GPU workflows with tools like the A1111 WebUI (the AUTOMATIC1111 interface for Stable Diffusion) for real-time image rendering and model testing, which can make the overall workflow more efficient for developers.

¶ Shakker AI and TensorFlow for Advanced Applications

For developers looking to extend their TensorFlow projects, Shakker AI offers tools for AI model creation and testing.

¶ Features That Complement TensorFlow:

- Custom Mode Generation: Customize prompts, models, and parameters for image generation and data augmentation.
- Canvas Tools: Upscale, crop, inpaint, and create collages to preprocess images before TensorFlow training.
- Online Training Features: Train custom models directly within Shakker AI.
- Active Model Community: Access pre-trained models for anime, illustrations, and game assets, reducing development time.

By combining TensorFlow’s scalability with Shakker AI’s powerful features, developers can streamline their workflows and focus on innovation.
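One way to bring images prepared in an external tool such as Shakker AI into TensorFlow is to save them with one sub-folder per class and load them with Keras. This sketch assumes that folder layout. To stay self-contained, it first writes a few random placeholder PNGs into a temporary directory, and the class names `cat` and `dog` are hypothetical.

```python
import os
import tempfile

import tensorflow as tf

# Hypothetical folder layout: <root>/<class_name>/<image>.png
root = tempfile.mkdtemp()
for class_name in ["cat", "dog"]:
    os.makedirs(os.path.join(root, class_name))
    for i in range(4):
        pixels = tf.cast(tf.random.uniform((64, 64, 3), maxval=256, dtype=tf.int32), tf.uint8)
        tf.io.write_file(os.path.join(root, class_name, f"{i}.png"), tf.io.encode_png(pixels))

# Labels are inferred from sub-folder names, sorted alphabetically.
dataset = tf.keras.utils.image_dataset_from_directory(
    root,
    image_size=(32, 32),  # images are resized as they are loaded
    batch_size=4,
)
print(dataset.class_names)  # ['cat', 'dog']

for images, labels in dataset.take(1):
    print(images.shape, labels.numpy())
```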

¶ Conclusion

TensorFlow remains a cornerstone framework for deep learning and AI applications, offering versatility and scalability for a range of tasks. Whether you're building neural networks, leveraging GPUs for training, or exploring AI innovations, TensorFlow provides a robust ecosystem to meet your needs.

When paired with platforms like Shakker AI, TensorFlow’s capabilities are amplified, enabling seamless workflows for image generation, data preprocessing, and model training. Start your journey with TensorFlow today and unlock the potential of deep learning in your projects.