¶ Multimodal AI: Meaning, Generative Tools, and Examples

Multimodal AI is reshaping the field of artificial intelligence by enabling systems to process and integrate multiple data types, such as text, images, audio, and video. Because these systems can draw on several kinds of input at once, they can produce smarter, more context-aware outputs than models limited to a single data type, opening the way for new applications across many industries.

¶ What is Multimodal AI?

What is multimodal AI? At its core, multimodal AI refers to the ability of AI systems to process, analyze, and integrate multiple modes of data, such as text, images, video, and audio, to perform complex tasks. Unlike single-modal systems, which work with only one type of input, multimodal systems can combine complementary signals to produce richer and more contextually accurate outputs.

For instance, a conversational agent powered by multimodal generative AI can interpret a spoken command together with an accompanying image to give a tailored response. Similarly, text-to-image models such as OpenAI’s DALL-E connect language and visual data to generate high-quality images from user prompts. By uniting different data types, multimodal AI gives machines more context to work with, which can improve both understanding and output accuracy, making it a cornerstone of the future AI landscape.
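To make "integrate" concrete, here is a minimal sketch of one simple strategy, late fusion: each modality is analyzed separately and the per-modality predictions are combined afterwards. The function name `late_fusion`, the class labels, the probabilities, and the equal weights are all hypothetical illustrative values, not outputs of any real model. Many modern multimodal models instead fuse modalities earlier, inside a single shared network.

```python
# Late fusion: score each modality separately, then combine the results.
# All numbers below are hypothetical, chosen only to illustrate the idea.

def late_fusion(modality_probs: dict[str, list[float]],
                weights: dict[str, float]) -> list[float]:
    """Return a weighted average of per-class probabilities across modalities."""
    total_weight = sum(weights[m] for m in modality_probs)
    num_classes = len(next(iter(modality_probs.values())))
    fused = [0.0] * num_classes
    for modality, probs in modality_probs.items():
        if len(probs) != num_classes:
            raise ValueError(f"{modality}: expected {num_classes} classes, got {len(probs)}")
        for i, p in enumerate(probs):
            fused[i] += weights[modality] * p / total_weight
    return fused


classes = ["cat", "dog"]
# Hypothetical outputs of a text model and an image model for the same post.
text_probs = [0.4, 0.6]   # caption is ambiguous, slightly favors "dog"
image_probs = [0.9, 0.1]  # photo clearly shows a cat
fused = late_fusion({"text": text_probs, "image": image_probs},
                    {"text": 1.0, "image": 1.0})
print(dict(zip(classes, (round(p, 2) for p in fused))))  # {'cat': 0.65, 'dog': 0.35}
```

In this toy case the text alone leans slightly toward "dog", but the confident image prediction tips the fused result toward "cat", which is the kind of extra context a single-modal system would miss.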

¶ Multimodal AI Learning Examples

The field of multimodal AI is evolving rapidly, and a growing number of real-world systems show its potential. Below are some of the most notable examples of how this technology is being applied:

- GPT-4V(ision): A version of GPT-4 that accepts images as well as text as input, allowing it to describe, answer questions about, and reason over visual content (its output is text).

- Inworld AI: Creates intelligent and interactive virtual characters for gaming and digital worlds.

- Runway Gen-2: A dynamic tool that generates videos from text prompts.

- DALL-E 3: OpenAI’s image generator, which produces detailed visuals from textual descriptions.

- ImageBind by Meta AI: Learns a single shared embedding space across six modalities (images/video, text, audio, depth, thermal, and IMU motion data), so content in one modality can be matched with or retrieved from another.

- Google’s Multimodal Transformer (MTN): Combines audio, text, and image data to produce captions and descriptive video summaries.

¶ Industry Applications

- Healthcare: Multimodal AI assists in diagnostics by analyzing patient data from multiple sources, such as X-rays, reports, and medical histories.

- Entertainment: It enhances gaming experiences by creating lifelike characters and immersive virtual worlds.

- Education: Enables personalized learning by integrating text, videos, and interactive content.

By combining diverse data sources, these examples show how multimodal AI can transform traditional workflows and support more sophisticated solutions.

¶ Multimodal Generative AI Tools

The emergence of multimodal generative AI tools has unlocked new possibilities for creative professionals, researchers, and developers. Below are some of the cutting-edge tools in the field:

- Google Gemini: Combines text, images, and other modalities to generate, enhance, and understand diverse content.

- Vertex AI: Google Cloud’s machine learning platform, which supports tasks such as image recognition and video analysis.

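- OpenAI’s CLIP: Learns a shared embedding space for text and images. It does not generate captions by itself; instead, it scores how well a piece of text matches an image, which powers zero-shot image classification and visual search.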

- Hugging Face’s Transformers: An open-source library of pretrained models for text, vision, audio, and multimodal tasks that developers can use to build and fine-tune versatile AI models.
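CLIP’s core idea, matching text and images by comparing their embeddings, can be sketched with cosine similarity followed by a softmax. In this minimal illustration the three-dimensional vectors are invented stand-ins for what real CLIP image and text encoders would produce (real embeddings have hundreds of dimensions), the temperature of 0.01 is an assumption meant to mimic CLIP’s sharpened similarity scores, and the function names are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Dot product of two vectors divided by the product of their lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def softmax(scores: list[float], temperature: float) -> list[float]:
    """Turn similarity scores into probabilities; subtracting the max avoids overflow."""
    scaled = [s / temperature for s in scores]
    top = max(scaled)
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def zero_shot_classify(image_emb: list[float],
                       prompt_embs: dict[str, list[float]],
                       temperature: float = 0.01) -> dict[str, float]:
    """Score one image against text prompts that live in the same embedding space."""
    prompts = list(prompt_embs)
    sims = [cosine_similarity(image_emb, prompt_embs[p]) for p in prompts]
    return dict(zip(prompts, softmax(sims, temperature)))


# Invented toy embeddings standing in for real CLIP encoder outputs.
image_embedding = [0.9, 0.1, 0.0]
prompt_embeddings = {
    "a photo of a dog": [1.0, 0.0, 0.0],
    "a photo of a cat": [0.0, 1.0, 0.0],
    "a diagram": [0.0, 0.0, 1.0],
}
scores = zero_shot_classify(image_embedding, prompt_embeddings)
print(max(scores, key=scores.get))  # a photo of a dog
```

Running the same comparison in the other direction, ranking many image embeddings against a single text query, is the basis of text-to-image visual search.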

¶ Shakker AI – A Pioneer in Multimodal Generative AI

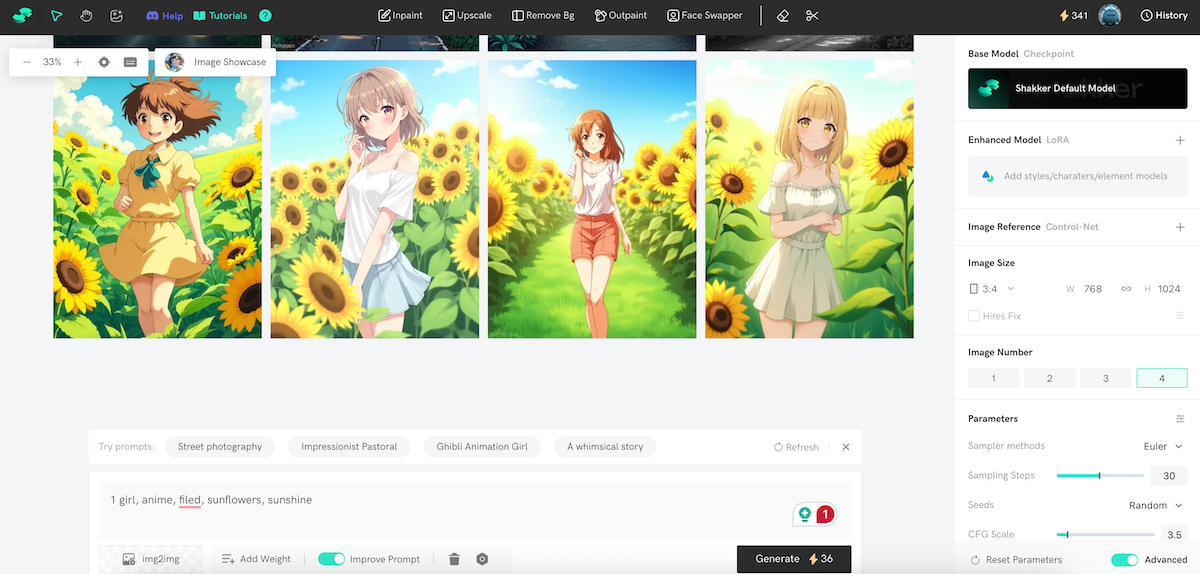

Among the leaders in multimodal generative AI, Shakker AI stands out for its advanced features and user-centric tools:

¶ Shakker AI Generator Tools

- Custom Modes: Includes prompt-based (text-to-image) generation, image-to-image transformations, and advanced settings such as sampler selection and ADetailer for automatic detail refinement.

- Canvas Features:

  - Inpainting: Edit parts of an image seamlessly.

  - Outpainting: Expand image boundaries while maintaining context.

  - Cropping and Collage Creation: Refine and combine visuals effortlessly.
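Inpainting and outpainting both come down to a mask that marks which pixels a generative model should fill in. The minimal sketch below works on tiny grayscale images stored as nested lists and shows only that masking step: `pad_for_outpainting` enlarges the canvas and marks the new border, and `composite` keeps the original pixels outside the mask. The function names are hypothetical, the "generated" pixels stand in for a real model’s output, and this is not Shakker AI’s implementation; production tools typically also feather the mask edges so seams blend smoothly.

```python
Image = list[list[int]]  # grayscale pixel values 0-255, one inner list per row
Mask = list[list[int]]   # 1 = pixel to be generated, 0 = pixel to keep


def pad_for_outpainting(image: Image, pad: int, fill: int = 0) -> tuple[Image, Mask]:
    """Enlarge the canvas by `pad` pixels on every side and mask the new border."""
    h, w = len(image), len(image[0])
    new_w = w + 2 * pad
    padded = [[fill] * new_w for _ in range(pad)]
    padded += [[fill] * pad + row + [fill] * pad for row in image]
    padded += [[fill] * new_w for _ in range(pad)]
    mask = [[0 if pad <= y < pad + h and pad <= x < pad + w else 1
             for x in range(new_w)]
            for y in range(h + 2 * pad)]
    return padded, mask


def composite(original: Image, generated: Image, mask: Mask) -> Image:
    """Take generated pixels where the mask is 1 and original pixels elsewhere."""
    return [[g if m else o for o, g, m in zip(o_row, g_row, m_row)]
            for o_row, g_row, m_row in zip(original, generated, mask)]


# Outpainting a 2x2 image by one pixel on each side.
padded, mask = pad_for_outpainting([[10, 20], [30, 40]], pad=1)
fake_model_output = [[99] * 4 for _ in range(4)]  # stand-in for a real generator
result = composite(padded, fake_model_output, mask)
for row in result:
    print(row)
```

For inpainting, the mask marks a region inside the original image instead of a new border, and `composite` works the same way.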

¶ Built-in Online Tools

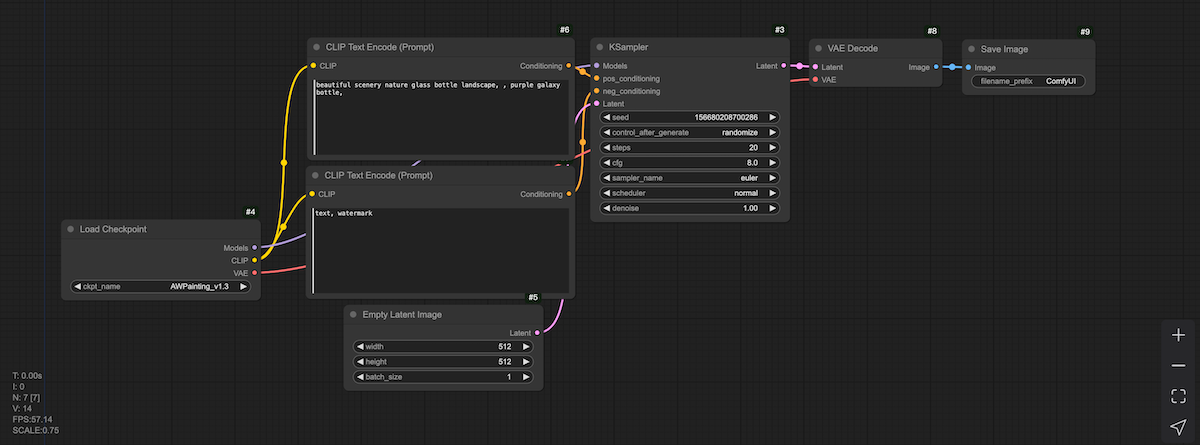

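- A1111 WebUI: An online version of the AUTOMATIC1111 Stable Diffusion web interface, offering detailed control over generation settings.

- ComfyUI: A node-based interface for building, saving, and reusing custom generation workflows.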
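ComfyUI represents a generation pipeline as a graph of nodes, each consuming the outputs of earlier nodes, and runs them in dependency order. The sketch below mimics that idea with Python’s standard `graphlib` module. The node names follow the class names in ComfyUI’s default text-to-image workflow, but the dictionary is a simplified, hypothetical representation, not ComfyUI’s API or its workflow file format.

```python
from graphlib import TopologicalSorter

# Each node maps to the set of nodes whose outputs it consumes.
# Simplified, hypothetical representation of a text-to-image workflow.
workflow = {
    "CheckpointLoaderSimple": set(),  # loads the model, text encoder, and VAE
    "CLIPTextEncode (positive)": {"CheckpointLoaderSimple"},
    "CLIPTextEncode (negative)": {"CheckpointLoaderSimple"},
    "EmptyLatentImage": set(),        # blank latent canvas to denoise
    "KSampler": {"CheckpointLoaderSimple", "CLIPTextEncode (positive)",
                 "CLIPTextEncode (negative)", "EmptyLatentImage"},
    "VAEDecode": {"KSampler", "CheckpointLoaderSimple"},
    "SaveImage": {"VAEDecode"},
}


def execution_order(graph: dict[str, set[str]]) -> list[str]:
    """Return an order in which every node runs after all of its inputs."""
    return list(TopologicalSorter(graph).static_order())


for step, node in enumerate(execution_order(workflow), start=1):
    print(step, node)
```

Adding a node, such as an upscaler between `VAEDecode` and `SaveImage`, only changes the graph; the ordering logic stays the same, which is what makes node-based workflows easy to rearrange.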

¶ Training Tools

Shakker AI lets users train and refine models for their specific needs, helping them produce personalized, higher-quality outputs.

These tools showcase how multimodal AI is revolutionizing content creation, gaming, and other real-world applications, making it an essential technology for the future.

¶ Why Multimodal AI Matters

Multimodal AI advances AI solutions across many domains in three main ways:

- Better Context Understanding: By integrating diverse data modalities, systems can interpret information with greater accuracy and relevance.

- Enhanced Generative Capabilities: Multimodal generative AI drives innovation in industries like entertainment, marketing, and education.

- Boosted Creativity and Productivity: Businesses and individuals can create sophisticated outputs efficiently, unlocking new possibilities in content creation.

Taken together, these benefits explain why multimodal AI is widely seen as the next step in AI evolution, improving both usability and functionality.

¶ Final Thoughts

Multimodal AI represents a significant leap forward in AI research and applications. By integrating multiple data types, it enables intelligent, context-rich solutions in industries ranging from healthcare to entertainment. Tools like Shakker AI illustrate the potential of multimodal generative AI, offering advanced features such as inpainting, outpainting, and personalized model training. As the technology continues to evolve, it is likely to change how we interact with AI and open the way to further innovation. Explore tools like Shakker AI to see what this technology can do.